How much is the artificial intelligence (AI) revolution altering the process of communicating science? With generative AI tools such as ChatGPT improving so rapidly, attitudes about using them to write research papers are also evolving. The number of papers with signs of AI use is rising rapidly (D. Kobak et al. Preprint at arXiv https://doi.org/pkhp; 2024), raising questions around plagiarism and other ethical concerns.

To get a sense of researchers’ thinking on this topic, Nature posed a variety of scenarios to some 5,000 academics around the world, to understand which uses of AI are considered ethically acceptable.

The survey results suggest that researchers are sharply divided on what they feel are appropriate practices. Whereas academics generally feel it’s acceptable to use AI chatbots to help to prepare manuscripts, relatively few report actually using AI for this purpose, and those who did often say they didn’t disclose it.

Past surveys reveal that researchers also use generative AI tools to help them with coding, to brainstorm research ideas and for a host of other tasks. In some cases, most in the academic community already agree that such applications are either appropriate or, as in the case of generating AI images, unacceptable. Nature’s latest poll focused on writing and reviewing manuscripts, areas in which the ethics aren’t as clear-cut.

A divided landscape

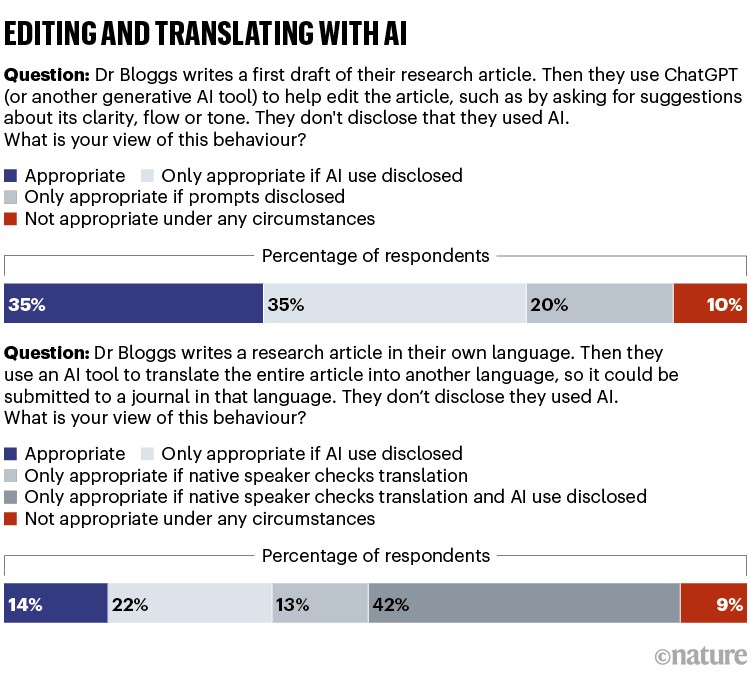

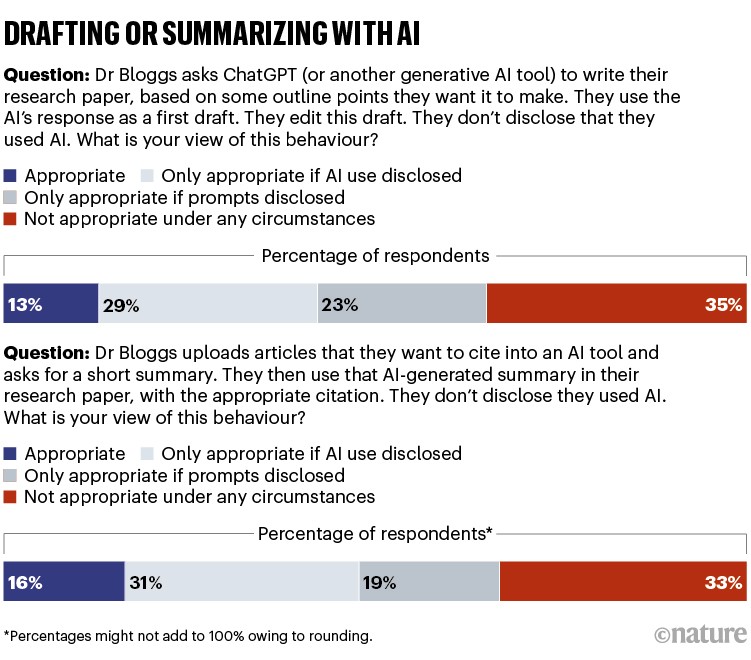

Nature’s survey laid out several scenarios in which a fictional academic, named Dr Bloggs, had used AI without disclosing it: for example, to generate the first draft of a paper, to edit their own draft, to craft specific sections of the paper and to translate a paper. Other scenarios involved using AI to write a peer review or to provide suggestions about a manuscript Dr Bloggs was reviewing (see Supplementary information for full survey, data and methodology; you can also test yourself against some of the survey questions).

Survey participants were asked what they thought was acceptable and whether they had used AI in these situations, or would be willing to. They weren’t informed about journal policies, because the intent was to reveal researchers’ underlying opinions. The survey was anonymous.

The 5,229 respondents were contacted in March, through e-mails sent to randomly chosen authors of research papers recently published worldwide and to some participants in Springer Nature’s market-research panel of authors and reviewers, or through an invitation from Nature’s daily briefing newsletter. They don’t necessarily represent the views of researchers in general, because of inevitable response bias. However, they were drawn from all around the world (of those who stated a country, 21% were from the United States, 10% from India and 8% from Germany, for instance) and represent various career stages and fields. (Authors in China are under-represented, mainly because many didn’t respond to e-mail invitations.)

The survey suggests that current opinions on AI use vary among academics, sometimes widely. Most respondents (more than 90%) think it’s acceptable to use generative AI to edit one’s research paper or to translate it. But they differ on whether the AI use should be disclosed, and in what format: for instance, through a simple disclosure, or by giving details about the prompts given to an AI tool.

When it comes to generating text with AI (for instance, to write all or part of one’s paper), views are more divided. In general, a majority (65%) think it’s ethically acceptable, but about one-third are against it.

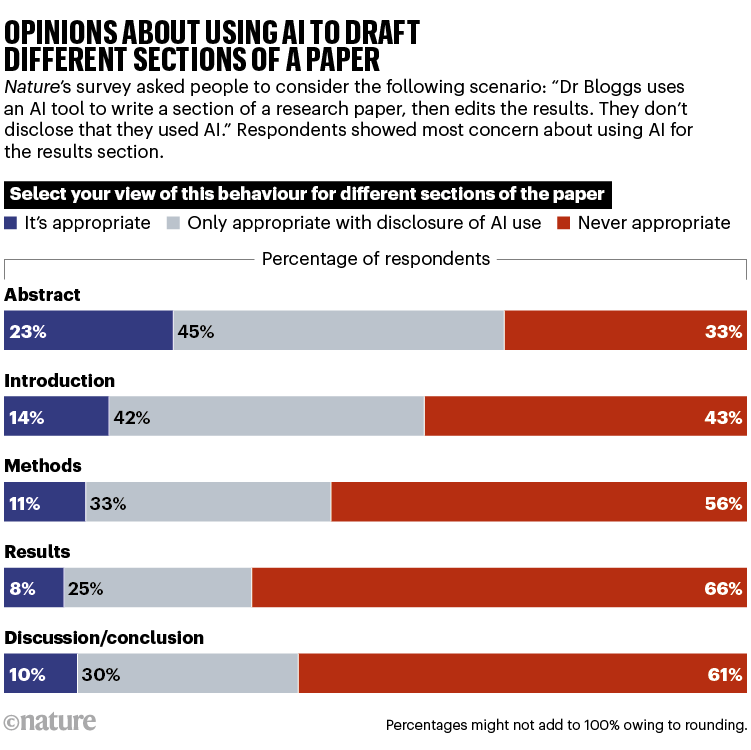

Asked about using AI to draft specific sections of a paper, most researchers felt it was acceptable to do this for the paper’s abstract, but more were opposed to doing so for other sections.

Although publishers generally agree that substantive AI use in academic writing should be declared, the response from Nature’s survey suggests that not all researchers agree, says Alex Glynn, a research literacy and communications instructor at the University of Louisville in Kentucky. “Does the disconnect reflect a lack of familiarity with the issue or a principled disagreement with the publishing community?”

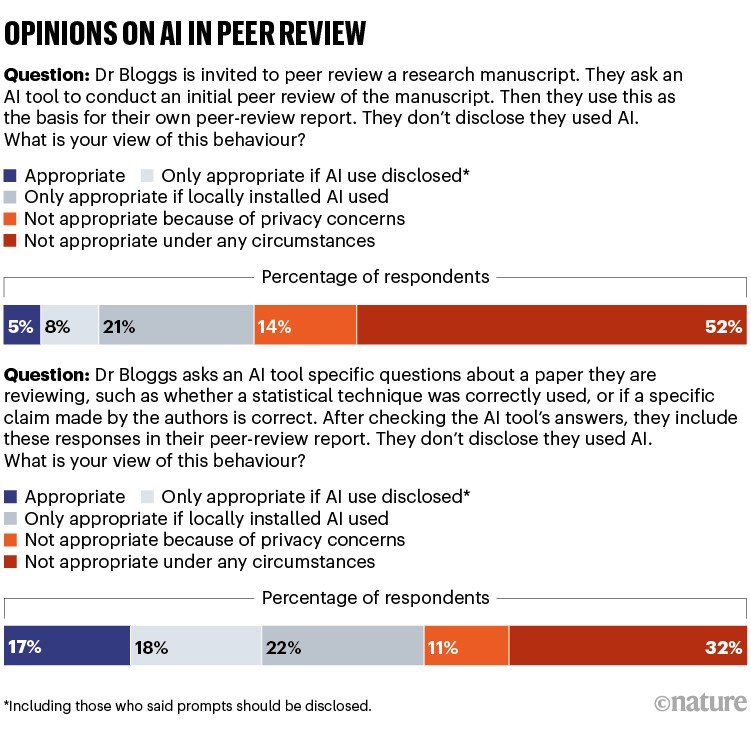

Using AI to generate an initial peer-review report was more frowned upon, with more than 60% of respondents saying it was not appropriate (about one-quarter of these cited privacy concerns). But the majority (57%) felt it was acceptable to use AI to assist in peer review by answering questions about a manuscript.

“I’m glad to see people seem to think using AI to draft a peer-review report isn’t acceptable, but I’m more surprised by the number of people who seem to think AI assistance for human reviewers is also out of bounds,” says Chris Leonard, a scholarly-communications consultant who writes about developments in AI and peer review in his newsletter, Scalene. (Leonard also works as a director of product solutions at Cactus Communications, a multinational firm in Mumbai, India.) “That hybrid approach is perfect to catch things reviewers may have missed.”

AI still used only by a minority

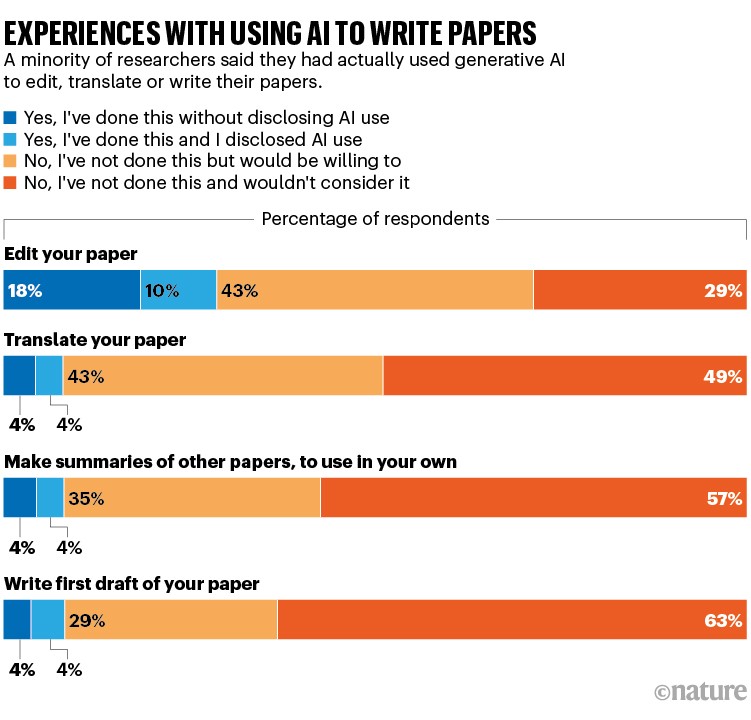

In general, few academics said they had actually used AI for the scenarios Nature posed. The most popular category was using AI to edit one’s research paper, but only around 28% said they had done this (another 43%, however, said they’d be willing to). Those numbers dropped to around 8% for writing a first draft, making summaries of other articles for use in one’s own paper, translating a paper and supporting peer review.

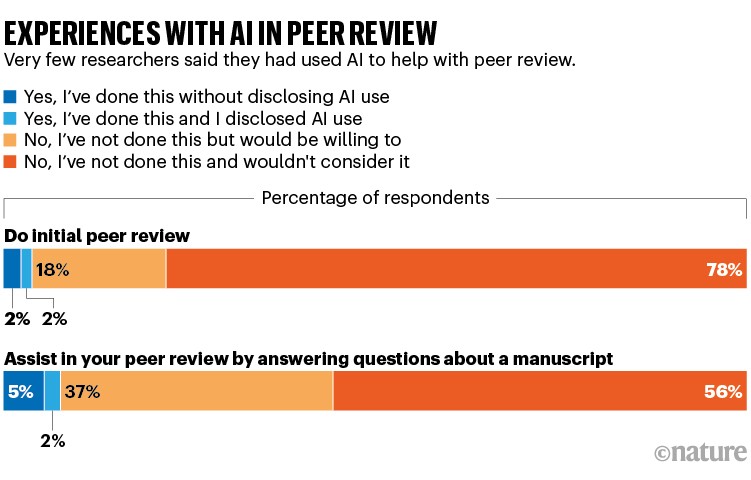

A mere 4% of respondents said they’d used AI to conduct an initial peer review.

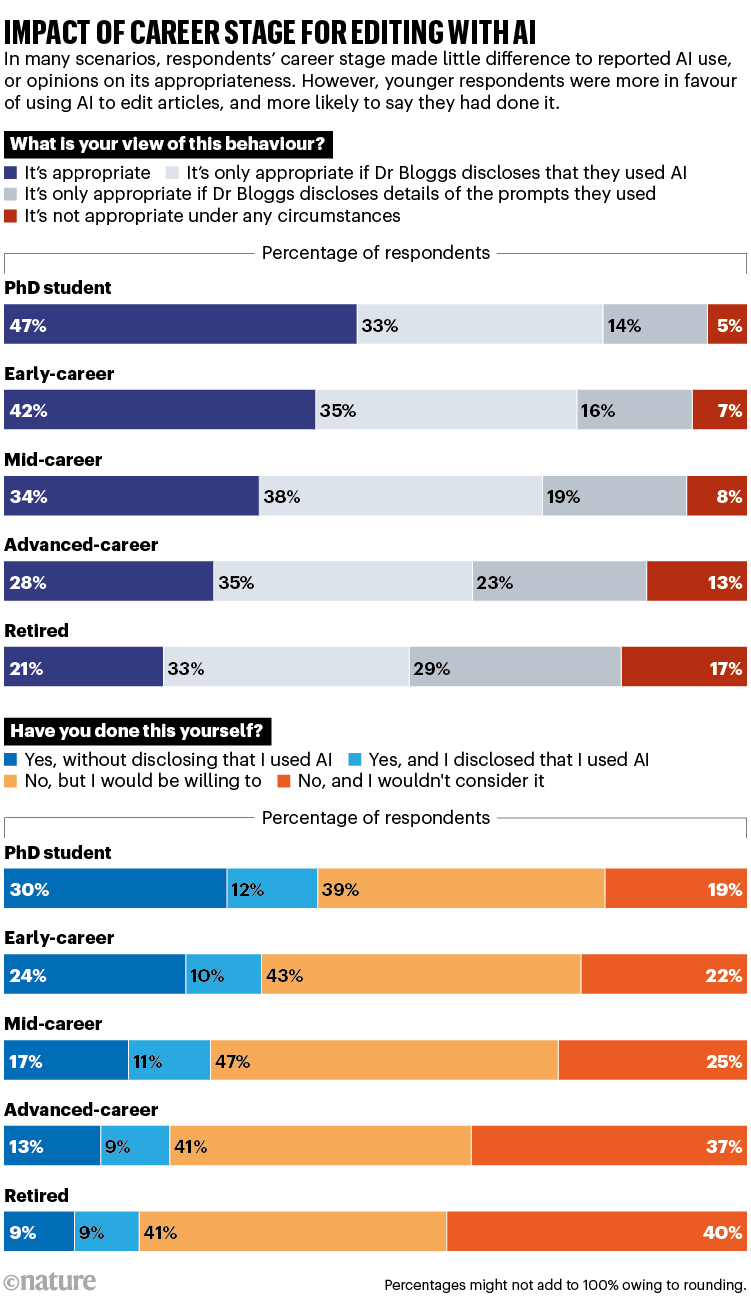

Overall, about 65% reported that they had never used AI in any of the scenarios given, with people earlier in their careers being more likely to have used AI in at least one case. But when respondents did say they had used AI, they more often than not said they hadn’t disclosed it at the time.

“These results validate what we’ve also heard from researchers: that there’s great enthusiasm but low adoption of AI to support the research process,” says Josh Jarrett, a senior vice-president at Wiley, the multinational scholarly publisher, which has also surveyed researchers about use of AI.

Split opinions

When given the opportunity to comment on their views, researchers’ opinions varied drastically. On the one hand, some said that the broad adoption of generative AI tools made disclosure unnecessary. “AI will be, if not already is, a norm just like using a calculator,” says Aisawan Petchlorlian, a biomedical researcher at Chulalongkorn University in Bangkok. “‘Disclosure’ will not be an important issue.”

On the other hand, some said that AI use would always be unacceptable. “I’ll never condone using generative AI for writing or reviewing papers, it’s pathetic cheating and fraud,” said an Earth-sciences researcher in Canada.

Others were more ambivalent. Daniel Egan, who studies infectious diseases at the University of Cambridge, UK, says that although AI is a time-saver and excellent at synthesizing complex information from multiple sources, relying on it too heavily can feel like cheating oneself. “By using it, we rob ourselves of the opportunities to learn through engaging with these sometimes laborious processes.”

Respondents also raised a variety of concerns, from ethical questions around plagiarism and breaching trust and accountability in the publishing and peer-review process to worries about AI’s environmental impact.

Some said that although they generally accepted that the use of these tools could be ethical, their own experience revealed that AI often produced sub-par results: false citations, inaccurate statements and, as one person described it, “well-formulated crap”. Respondents also noted that the quality of an AI response could vary widely depending on the specific tool that was used.

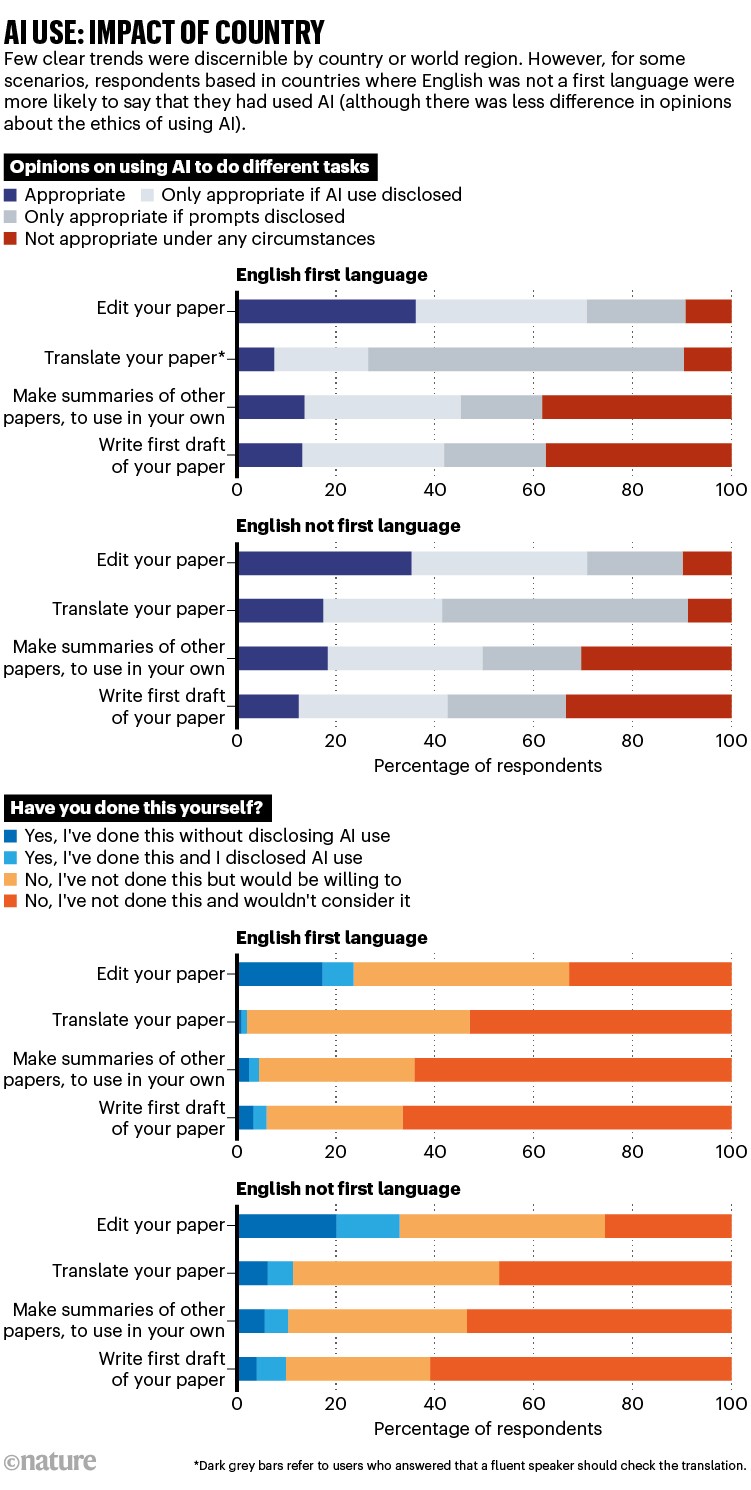

There were also some positives: many respondents pointed out that AI could help to level the playing field for academics for whom English was not a first language.

Several also explained why they supported certain uses, but found others unacceptable. “I use AI to self-translate from Spanish to English and vice versa, complemented with intensive editing of the text, but I would never use AI to generate work from scratch because I enjoy the process of writing, editing and reviewing,” says a humanities researcher from Spain. “And I would never use AI to review because I would be horrified to be reviewed by AI.”

Career stage and location

Perhaps surprisingly, academics’ opinions didn’t generally seem to differ widely by their geographical location, research field or career stage. However, respondents’ self-reported experience with AI for writing or reviewing papers did correlate strongly with having favourable opinions of the scenarios, as might be expected.

Career stage did seem to matter when it came to the most popular use of AI: editing papers. Here, younger researchers were both more likely to think the practice acceptable, and more likely to say they had done it.

And respondents from countries where English isn’t a first language were generally more likely than those in English-speaking nations to have used AI in the scenarios. Their underlying opinions on the ethics of AI use, however, didn’t seem to differ greatly.

Related surveys

Various researchers and publishers have conducted surveys of AI use in the academic community, looking broadly at how AI might be used in the scientific process. In January, Jeremy Ng, a health researcher at the Ottawa Hospital Research Institute in Canada, and his colleagues published a survey of more than 2,000 medical researchers, in which 45% of respondents said they had previously used AI chatbots (J. Y. Ng et al. Lancet Dig. Health 7, e94–e102; 2025). Of those, more than two-thirds said they had used it for writing or editing manuscripts, meaning that, overall, around 31% of the people surveyed had used AI for this purpose. That’s slightly more than in Nature’s survey.

“Our findings revealed enthusiasm, but also hesitation,” Ng says. “They really reinforced the idea that there’s not a lot of consensus around how, where or for what these chatbots should be used for scientific research.”

In February, Wiley published a survey examining AI use in academia by nearly 5,000 researchers around the world (see go.nature.com/438yngu). Among other findings, this revealed that researchers felt most uses of AI (such as writing up documentation and increasing the speed and ease of peer review) would be commonly accepted in the next few years. But less than half of the respondents said they had actually used AI for work, with 40% saying they’d used it for translation and 38% for proofreading or editing of papers.