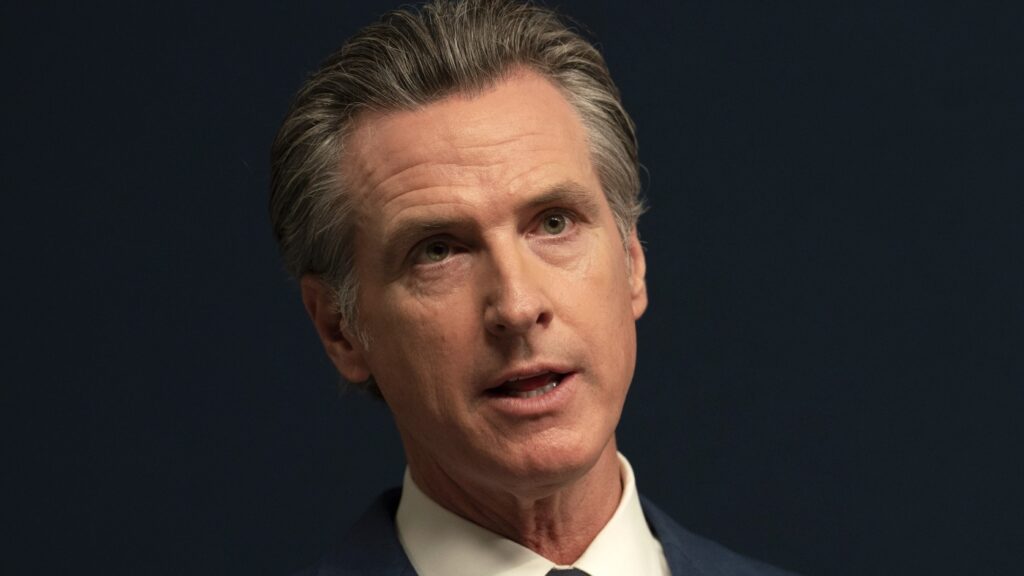

FILE - California Gov. Gavin Newsom vetoed SB 1047, a hotly contested measure that would have been the nation’s strictest AI safety regulation. (AP Photo/Rich Pedroncelli, File)

Rich Pedroncelli/AP/FR171957 AP

Gov. Gavin Newsom of California on Sunday vetoed a bill that would have enacted the nation’s most far-reaching regulations on the booming artificial intelligence industry.

California legislators overwhelmingly passed the bill, known as SB 1047, which was seen as a potential blueprint for national AI legislation.

The measure would have made tech companies legally liable for harms caused by AI models. In addition, the bill would have required tech companies to enable a “kill switch” for AI technology in the event the systems were misused or went rogue.

Newsom described the bill as “well-intentioned,” but noted that its requirements would have called for “stringent” regulations that would have been onerous for the state’s leading artificial intelligence companies.

In his veto message, Newsom said the bill focused too much on the biggest and most powerful AI models, saying smaller upstarts could prove to be just as disruptive.

“Smaller, specialized models may emerge as equally or even more dangerous than the models targeted by SB 1047 - at the potential expense of curbing the very innovation that fuels advancement in favor of the public good,” Newsom wrote.

California Senator Scott Wiener, a co-author of the bill, criticized Newsom’s move, saying the veto is a setback for artificial intelligence accountability.

“This veto leaves us with the troubling reality that companies aiming to create an extremely powerful technology face no binding restrictions from U.S. policymakers, particularly given Congress’s continuing paralysis around regulating the tech industry in any meaningful way,” Wiener wrote on X.

The now-killed bill would have forced the industry to conduct safety tests on massively powerful AI models. Without such requirements, Wiener wrote on Sunday, the industry is left policing itself.

“While the large AI labs have made admirable commitments to monitor and mitigate these risks, the truth is that the voluntary commitments from industry are not enforceable and rarely work out well for the public.”

Many powerful players in Silicon Valley, including venture capital firm Andreessen Horowitz, OpenAI and trade groups representing Google and Meta, lobbied against the bill, arguing it would slow the development of AI and stifle growth for early-stage companies.

“SB 1047 would threaten that growth, slow the pace of innovation, and lead California’s world-class engineers and entrepreneurs to leave the state in search of greater opportunity elsewhere,” OpenAI’s Chief Strategy Officer Jason Kwon wrote in a letter sent last month to Wiener.

Other tech leaders, however, backed the bill, including Elon Musk and pioneering AI scientists like Geoffrey Hinton and Yoshua Bengio, who signed a letter urging Newsom to sign it.

“We believe that the most powerful AI models may soon pose severe risks, such as expanded access to biological weapons and cyberattacks on critical infrastructure. It is feasible and appropriate for frontier AI companies to test whether the most powerful AI models can cause severe harms, and for these companies to implement reasonable safeguards against such risks,” wrote Hinton and dozens of former and current employees of leading AI companies.

On Sunday, in his X post, Wiener called the veto a “setback” for “everyone who believes in oversight of massive corporations that are making critical decisions that affect the safety and welfare of the public.”

Other states, like Colorado and Utah, have enacted laws more narrowly tailored to address how AI could perpetuate bias in employment and health-care decisions, as well as other AI-related consumer protection concerns.

Newsom has recently signed other AI bills into law, including one to crack down on the spread of deepfakes during elections. Another protects actors against their likenesses being replicated by AI without their consent.

As billions of dollars pour into the development of AI, and as it permeates more corners of everyday life, lawmakers in Washington still have not proposed a single piece of federal legislation to protect people from its potential harms, nor to provide oversight of its rapid development.